Go Concurrency Patterns

Why I Wrote This

I’ve been writing Go for a while now — at work for CLI tools, CI pipelines, and infrastructure tooling. Concurrency is the feature that drew me to the language, and it’s also the one that bit me the most when I didn’t understand it properly.

Over time, I’ve collected a mental catalog of concurrency patterns — some from Rob Pike’s original talks, some from books, and some from debugging my own code at 11 PM. This post is that catalog, written down so I can reference it and hopefully help others avoid the same mistakes.

Concurrency vs Parallelism

This distinction tripped me up early on. They’re not the same thing.

Concurrency is about structure — organizing code to handle multiple tasks by switching between them. Think of a juggler keeping several balls in the air. They’re not touching all balls at once, but they manage all of them.

Parallelism is about execution — literally doing multiple things at the same time on multiple CPU cores. Multiple jugglers, each with their own balls.

Go gives you concurrency primitives (goroutines and channels), and the runtime decides when to run things in parallel based on available cores. You write concurrent code; the scheduler figures out the parallelism.
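To see what the scheduler has to work with on a given machine, here is a small sketch using the standard runtime package. The helper name parallelismInfo is mine, not something from the talks or books.

```go
package main

import (
	"fmt"
	"runtime"
)

// parallelismInfo returns the number of logical CPUs and the current
// GOMAXPROCS setting (calling GOMAXPROCS with 0 only reads the value).
func parallelismInfo() (cpus, procs int) {
	return runtime.NumCPU(), runtime.GOMAXPROCS(0)
}

func main() {
	cpus, procs := parallelismInfo()
	fmt.Printf("logical CPUs: %d, GOMAXPROCS: %d\n", cpus, procs)
}
```

GOMAXPROCS caps how many goroutines can execute Go code at the same instant; the number of goroutines you start is independent of it.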

The Building Blocks

Two things make Go’s concurrency model work: goroutines and channels.

Goroutines are lightweight threads managed by the Go runtime. I can spin up thousands of them without worrying about memory — each one starts at just a few KB of stack space. At work, we have had programs running 10,000+ goroutines without breaking a sweat.

Channels are how goroutines communicate. The <- operator sends and receives data through them. They come in two flavors:

- Unbuffered channels: Both sides must be ready at the same time — like a baton handoff in a relay race. This is the default and the one I use most.

- Buffered channels (ch := make(chan int, 10)): The sender can drop off values until the buffer is full. Useful when you want some slack between producer and consumer.

The rule I follow: start with unbuffered channels, add buffering only when you have a specific reason.
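A quick way to see the difference between the two flavors is a non-blocking send. This is a minimal sketch; trySend is a hypothetical helper I made up for the demo.

```go
package main

import "fmt"

// trySend reports whether v could be sent on ch without blocking.
func trySend(ch chan int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		return false
	}
}

func main() {
	unbuffered := make(chan int)
	buffered := make(chan int, 2)

	// No receiver is waiting, so an unbuffered send cannot proceed.
	fmt.Println("unbuffered:", trySend(unbuffered, 1)) // prints: unbuffered: false

	// A buffered channel accepts values until its capacity is reached.
	fmt.Println("buffered #1:", trySend(buffered, 1)) // prints: buffered #1: true
	fmt.Println("buffered #2:", trySend(buffered, 2)) // prints: buffered #2: true
	fmt.Println("buffered #3:", trySend(buffered, 3)) // prints: buffered #3: false
}
```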

Fundamental Patterns

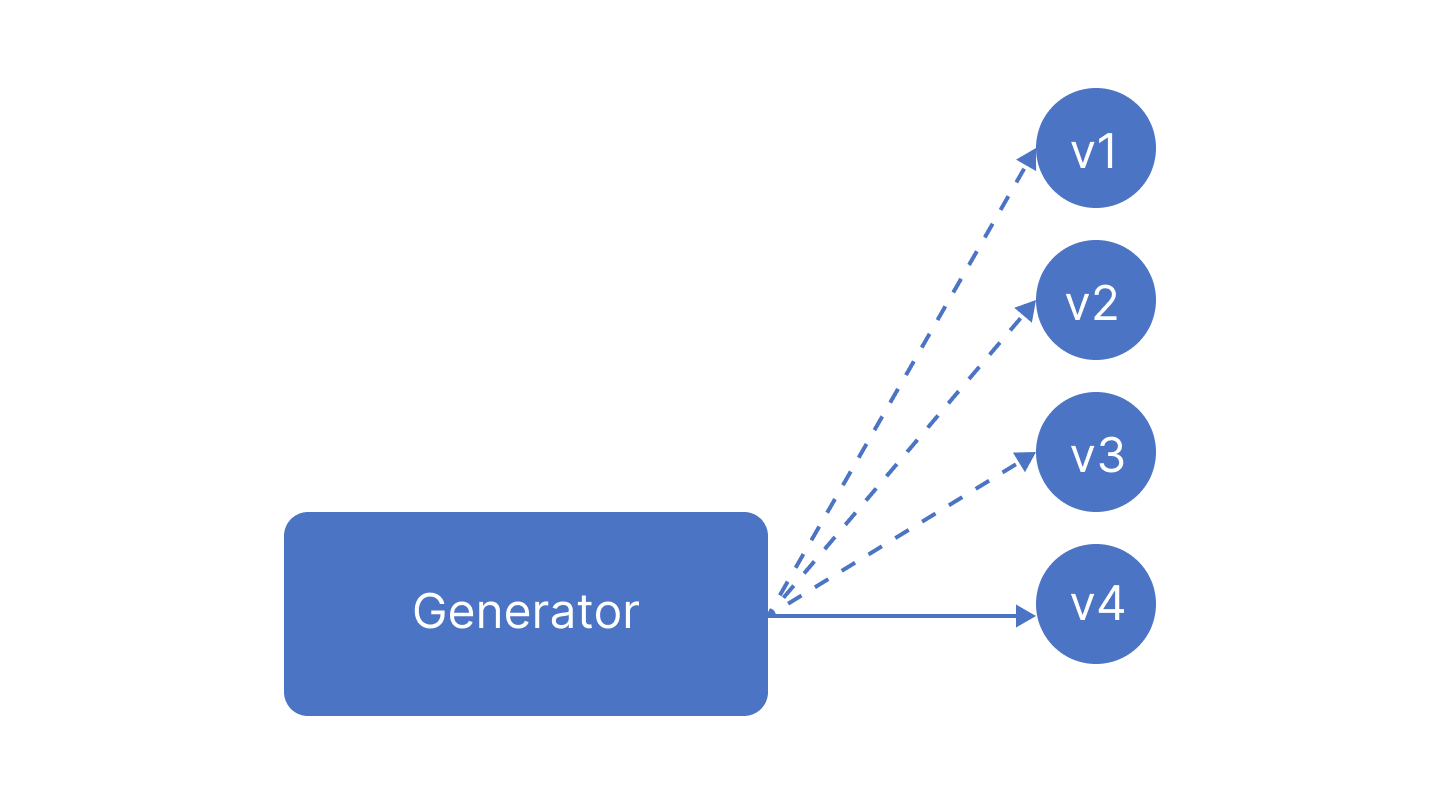

1. Generator

The generator is the pattern I reach for most often. A function spins up a goroutine, returns a channel, and lazily produces values over time. The consumer pulls values when it’s ready — no need to load everything into memory upfront.

    func generator() <-chan int {
        ch := make(chan int)
        go func() {
            for i := 0; ; i++ {
                ch <- i
            }
        }()
        return ch
    }

I first used this when I needed to stream log lines from multiple files concurrently. Instead of reading entire files into memory, each file got its own generator that emitted lines on demand.

Where it’s useful: streaming data, custom iterators, simulating real-time feeds, and as the first stage in a pipeline.
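One thing the minimal generator does not handle is stopping: once the consumer walks away, the goroutine stays blocked on its send forever. Here is a sketch of one way to make it stoppable, assuming a context is an acceptable way to signal "no more values needed". The take helper is hypothetical and only exists to drive the demo.

```go
package main

import (
	"context"
	"fmt"
)

// generator emits 0, 1, 2, ... until ctx is cancelled, then closes the channel.
func generator(ctx context.Context) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch)
		for i := 0; ; i++ {
			select {
			case ch <- i:
			case <-ctx.Done():
				return
			}
		}
	}()
	return ch
}

// take reads the first n values (n >= 1) and then cancels the generator.
func take(n int) []int {
	ctx, cancel := context.WithCancel(context.Background())
	defer cancel() // lets the generator goroutine exit

	var got []int
	for v := range generator(ctx) {
		got = append(got, v)
		if len(got) == n {
			break
		}
	}
	return got
}

func main() {
	fmt.Println(take(3)) // prints [0 1 2]
}
```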

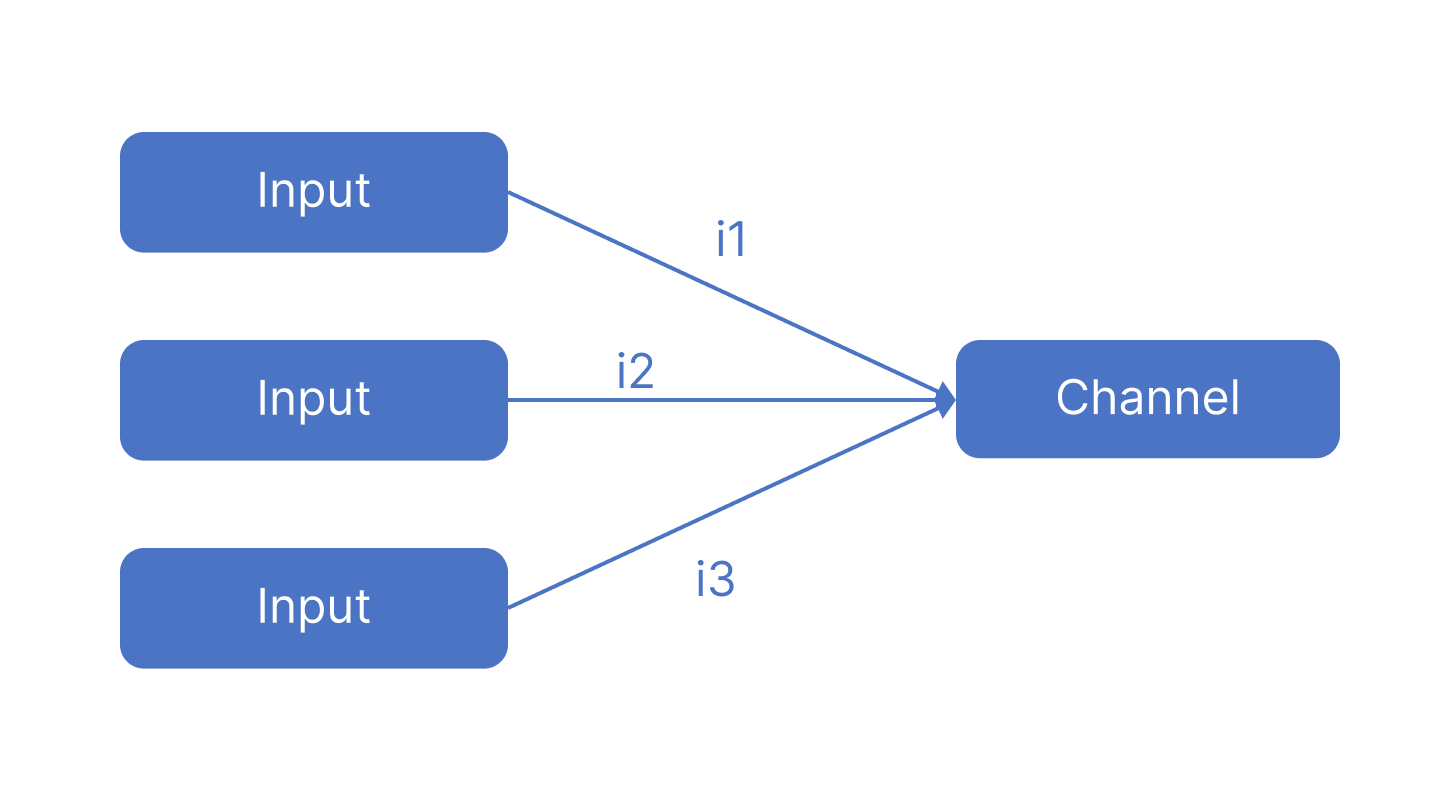

2. Fan-In

Fan-in merges multiple input channels into a single output channel. It’s a multiplexer — one consumer, many producers.

The subtle part I got wrong initially: the order of values on the output channel is not guaranteed. Whichever input channel has a value ready first gets forwarded. This matters if you care about ordering.

    func fanIn(input1, input2 <-chan int, output chan<- int) {
        defer close(output)
        var closed1, closed2 bool
        for !closed1 || !closed2 {
            select {
            case i1, ok1 := <-input1:
                if ok1 {
                    output <- i1
                } else {
                    closed1 = true
                    input1 = nil // Prevent busy-looping on closed channel
                }
            case i2, ok2 := <-input2:
                if ok2 {
                    output <- i2
                } else {
                    closed2 = true
                    input2 = nil // Prevent busy-looping on closed channel
                }
            }
        }
    }

The input1 = nil trick is worth noting: setting the variable for a closed channel to nil makes select skip that case entirely, which prevents a busy-loop. I learned this after burning CPU cycles on a tight loop that kept receiving zero values from a closed channel.

Where it’s useful: aggregating API responses, collecting results from parallel workers, merging event streams.
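The reason the nil trick works comes down to two channel rules: a closed channel is always ready to receive, and a nil channel never is. A small sketch to make that concrete (ready is a hypothetical helper):

```go
package main

import "fmt"

// ready reports whether a receive on ch can proceed immediately.
func ready(ch <-chan int) bool {
	select {
	case <-ch:
		return true
	default:
		return false
	}
}

func main() {
	closed := make(chan int)
	close(closed)

	// A closed channel is always ready: it yields the zero value with ok == false.
	v, ok := <-closed
	fmt.Println(v, ok, ready(closed)) // prints: 0 false true

	// A nil channel is never ready, so select never picks its case.
	var disabled <-chan int
	fmt.Println(ready(disabled)) // prints: false
}
```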

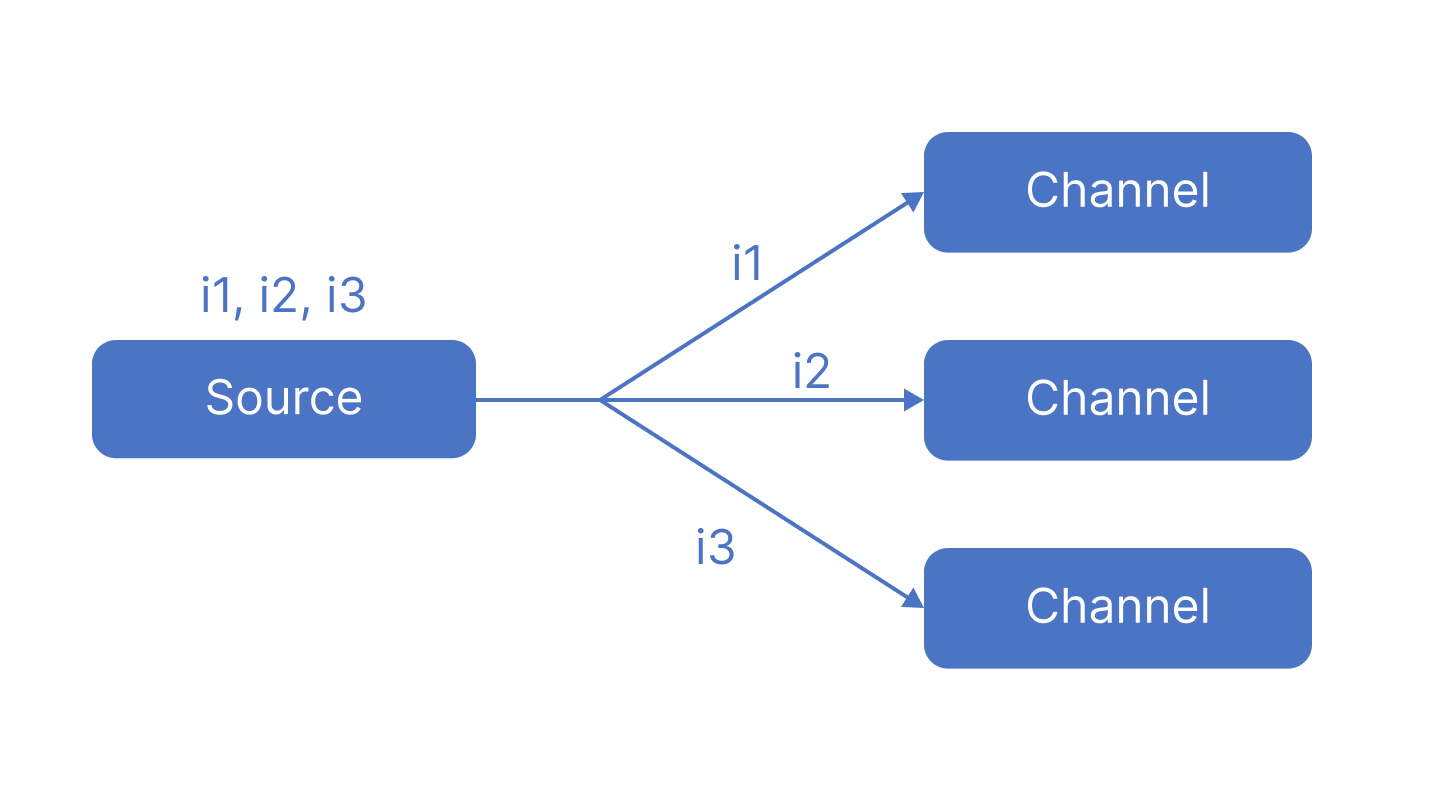

3. Fan-Out

Fan-out is the opposite — distributing work from one source to multiple workers. The simplest version is just having multiple goroutines read from the same channel. Go’s channel semantics guarantee that each value is received by exactly one goroutine.

    func fanOut(input <-chan int) <-chan int {
        output := make(chan int)
        go func() {
            defer close(output)
            for data := range input {
                output <- data
            }
        }()
        return output
    }

    func main() {
        ...
        out1 := fanOut(in)
        out2 := fanOut(in)
        ...
    }

I use fan-out + fan-in together all the time. At work, I built a tool that runs test suites concurrently: fan-out distributes test cases to worker goroutines, and fan-in collects the results back into a single stream.

Where it’s useful: parallel task processing, distributing requests across workers, load balancing.
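Here is a rough sketch of fan-out and fan-in combined, mainly to show that each value lands on exactly one worker. The result type and the fanOutIn name are made up for this example; which worker gets which value varies from run to run.

```go
package main

import (
	"fmt"
	"sync"
)

// result records which worker handled which value.
type result struct {
	worker, value int
}

// fanOutIn starts `workers` goroutines that all receive from the same input
// channel, then merges their results into one slice.
func fanOutIn(values []int, workers int) []result {
	in := make(chan int)
	out := make(chan result)

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			for v := range in { // each value goes to exactly one worker
				out <- result{worker: id, value: v}
			}
		}(w)
	}

	// Feed the input, then close it so the workers' range loops end.
	go func() {
		defer close(in)
		for _, v := range values {
			in <- v
		}
	}()

	// Close the merged output once every worker has returned.
	go func() { wg.Wait(); close(out) }()

	var results []result
	for r := range out {
		results = append(results, r)
	}
	return results
}

func main() {
	for _, r := range fanOutIn([]int{1, 2, 3, 4, 5, 6}, 3) {
		fmt.Printf("worker %d handled %d\n", r.worker, r.value)
	}
}
```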

4. Pipeline

    graph LR
        Gen[generate] -->|ch| Even[even]
        Even -->|evenOut| Sq[sq]
        Sq -->|sqOut| Consumer[consumer]

Pipelines chain processing stages together with channels. Each stage reads from an input channel, does some work, and writes to an output channel. It’s functional programming meets concurrency.

    func main() {
        ch := generate(1, 2, 3, 4, 5)
        evenOut := even(ch)
        sqOut := sq(evenOut)
        ...
    }

What I like about this pattern is how composable it is. Each stage is an independent function: I can test them in isolation, swap them out, or add new stages without touching the others.

Where it’s useful: data transformation chains, stream processing, request handling (auth → parse → process → respond).
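The stage names generate, even, and sq come from the snippet above, but their bodies are not shown, so here is one plausible way to write them. Treat the bodies, and the collect helper, as my assumptions rather than the canonical versions.

```go
package main

import "fmt"

// generate emits the given numbers and then closes its channel.
func generate(nums ...int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for _, n := range nums {
			out <- n
		}
	}()
	return out
}

// even forwards only the even numbers.
func even(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for n := range in {
			if n%2 == 0 {
				out <- n
			}
		}
	}()
	return out
}

// sq squares each number it receives.
func sq(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for n := range in {
			out <- n * n
		}
	}()
	return out
}

// collect drains a channel into a slice.
func collect(in <-chan int) []int {
	var got []int
	for v := range in {
		got = append(got, v)
	}
	return got
}

func main() {
	fmt.Println(collect(sq(even(generate(1, 2, 3, 4, 5))))) // prints [4 16]
}
```

Every stage closes its output when its input is exhausted, which is what lets the final range loop terminate.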

5. Worker Pool

    graph LR
        Producer[Producer] -->|jobs| W1[Worker 1]
        Producer -->|jobs| W2[Worker 2]
        Producer -->|jobs| W3[Worker 3]
        W1 -->|results| Consumer[Consumer]
        W2 -->|results| Consumer
        W3 -->|results| Consumer

This is the pattern I use when I have a lot of tasks and need to limit how many run at once. A fixed number of workers pull tasks from a shared channel.

    func worker(id int, jobs <-chan int, results chan<- int) {
        for j := range jobs {
            results <- j * 2
        }
    }

    func main() {
        const numJobs = 6
        jobs := make(chan int, numJobs)
        results := make(chan int, numJobs)

        for w := range 3 {
            go worker(w, jobs, results)
        }

        for j := range numJobs {
            jobs <- j
        }
        close(jobs)

        for range numJobs {
            <-results
        }
    }

I learned the hard way that spawning a goroutine per task doesn’t scale indefinitely. When I was processing thousands of files concurrently, the system ran out of file descriptors. A worker pool with 50 workers solved it: same throughput, no resource exhaustion.

Where it’s useful: batch processing, handling web requests, any scenario where unbounded concurrency would exhaust resources.

6. Queuing (Semaphore)

    graph LR
        T1[Task 1] --> Q[Buffered Channel<br/>capacity = limit]
        T2[Task 2] --> Q
        T3[Task 3] --> Q
        T4[Task ...] --> Q
        Q --> G1[Goroutine]
        Q --> G2[Goroutine]

Similar to worker pools, but implemented differently. A buffered channel acts as a semaphore: a task acquires a slot before starting work and releases it when done.

    func process(w int, queue chan struct{}, wg *sync.WaitGroup) {
        queue <- struct{}{} // Acquire a slot; blocks while `limit` tasks are running
        go func() {
            defer wg.Done()
            defer func() { <-queue }() // Release the slot, even if the task panics
            ... // Perform tasks
        }()
    }

    func main() {
        limit, work := 2, 8
        var wg sync.WaitGroup
        queue := make(chan struct{}, limit) // Buffered channel with a capacity of `limit`
        wg.Add(work)
        for w := range work {
            process(w, queue, &wg)
        }
        wg.Wait()
        close(queue)
    }

The difference from worker pools: here, each task gets its own goroutine, but the buffered channel caps how many can be active simultaneously. I prefer this when the tasks are heterogeneous and don’t fit neatly into a “pull from queue” model.

Where it’s useful: rate limiting, controlling concurrent API calls, throttling I/O-heavy operations.
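To convince myself the cap actually holds, I like to measure the peak number of tasks in flight. This is a sketch with made-up names (runLimited, sem), and the sleep is just a stand-in for real work.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// runLimited runs `tasks` goroutines, at most `limit` at a time, and returns
// the highest number of tasks that were observed running simultaneously.
func runLimited(tasks, limit int) int64 {
	var (
		wg      sync.WaitGroup
		running atomic.Int64
		peak    atomic.Int64
	)
	sem := make(chan struct{}, limit)

	for i := 0; i < tasks; i++ {
		sem <- struct{}{} // acquire a slot before starting the goroutine
		wg.Add(1)
		go func() {
			defer wg.Done()
			defer func() { <-sem }() // release the slot when the task ends

			n := running.Add(1)
			// Record a new peak if this is the most tasks seen in flight so far.
			for {
				p := peak.Load()
				if n <= p || peak.CompareAndSwap(p, n) {
					break
				}
			}
			time.Sleep(5 * time.Millisecond) // stand-in for real work
			running.Add(-1)
		}()
	}
	wg.Wait()
	return peak.Load()
}

func main() {
	fmt.Println("peak concurrency:", runLimited(8, 2))
}
```

The peak can never exceed the limit because a task only increments the counter while it holds a slot.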

Advanced Patterns

1. Tee Channel

    graph LR
        In[Input Channel] --> Tee[tee]
        Tee --> Out1[Output 1<br/>Processing]
        Tee --> Out2[Output 2<br/>Logging]

Named after the Unix tee command, it duplicates a stream into two. One input channel, two output channels, both get every value.

    func tee(in <-chan int) (<-chan int, <-chan int) {
        out1, out2 := make(chan int), make(chan int)
        go func() {
            defer close(out1)
            defer close(out2)
            for val := range in {
                out1 <- val
                out2 <- val
            }
        }()
        return out1, out2
    }

I’ve used this to split a data stream so one copy goes to the main processing pipeline and the other goes to a logger. The gotcha with unbuffered channels is that each value has to be taken from out1 before it is offered on out2, so a slow receiver on either side stalls the other. Adding small buffers helps if that’s a concern.

Where it’s useful: mirroring data to logging/monitoring, dual processing pipelines, debugging without interrupting the main flow.
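A variation worth knowing offers each value to both outputs through a select, so it no longer matters which consumer reads first. This is a sketch of that idea (it reuses the nil-channel trick); the source helper is hypothetical.

```go
package main

import "fmt"

// tee copies every value from in to both outputs. Each value is offered to
// both sides at once, so either consumer may read first.
func tee(in <-chan int) (<-chan int, <-chan int) {
	out1, out2 := make(chan int), make(chan int)
	go func() {
		defer close(out1)
		defer close(out2)
		for v := range in {
			// Local copies so a side that has received v can be disabled with nil.
			o1, o2 := out1, out2
			for o1 != nil || o2 != nil {
				select {
				case o1 <- v:
					o1 = nil
				case o2 <- v:
					o2 = nil
				}
			}
		}
	}()
	return out1, out2
}

// source emits the given values and closes its channel.
func source(vals ...int) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch)
		for _, v := range vals {
			ch <- v
		}
	}()
	return ch
}

func main() {
	a, b := tee(source(1, 2, 3))
	for i := 0; i < 3; i++ {
		// Reading the second output first is fine with this version.
		fmt.Println(<-b, <-a)
	}
}
```

Both consumers still have to keep up overall: the next value is not read until both sides have taken the current one.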

2. Bridge Channel

    graph LR
        Ch1[Channel 1] --> Bridge[bridge]
        Ch2[Channel 2] --> Bridge
        Ch3[Channel 3] --> Bridge
        Bridge --> Out[Single Output]

Bridge flattens multiple channels into one. The classic form takes a channel of channels (<-chan <-chan T) and collapses it into a single consumable stream. The variant here takes a variadic list of channels instead and drains them concurrently, so values from different inputs may interleave.

    func bridge(inputs ...<-chan int) <-chan int {
        out := make(chan int)
        var wg sync.WaitGroup
        for _, ch := range inputs {
            wg.Add(1)
            go func(c <-chan int) {
                defer wg.Done()
                for v := range c {
                    out <- v
                }
            }(ch)
        }
        go func() { wg.Wait(); close(out) }()
        return out
    }

Where it’s useful: dynamic data sources, simplifying nested channel structures, aggregating results from varying numbers of producers.
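For the channel-of-channels case specifically, a bridge can drain the inner channels one after another, which also preserves their order. A minimal sketch, assuming int values; the batches producer is hypothetical and only there to feed the demo.

```go
package main

import "fmt"

// bridge reads channels from chans one at a time and forwards their values,
// in order, onto a single output channel.
func bridge(chans <-chan (<-chan int)) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for ch := range chans {
			for v := range ch {
				out <- v
			}
		}
	}()
	return out
}

// batches is a hypothetical producer that emits one closed, pre-filled
// channel per group of values.
func batches(groups ...[]int) <-chan (<-chan int) {
	chans := make(chan (<-chan int))
	go func() {
		defer close(chans)
		for _, g := range groups {
			ch := make(chan int, len(g))
			for _, v := range g {
				ch <- v
			}
			close(ch)
			chans <- ch
		}
	}()
	return chans
}

func main() {
	for v := range bridge(batches([]int{1, 2}, []int{3}, []int{4, 5})) {
		fmt.Print(v, " ") // prints 1 2 3 4 5
	}
	fmt.Println()
}
```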

3. Ring Buffer Channel

    graph LR
        In[Fast Producer] --> RB[Buffered Channel<br/>size = N]
        RB --> Out[Consumer]
        RB -.->|full?| Drop[Discard Oldest]
        Drop -.-> RB

A fixed-size buffer where new values overwrite the oldest ones when full. Useful when you only care about the most recent data.

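    func ringBuffer(in <-chan int, size int) <-chan int {
        out := make(chan int, size)
        go func() {
            defer close(out)
            for v := range in {
                select {
                case out <- v:
                default:
                    // Buffer is full: discard the oldest value to make room.
                    // The inner default covers a consumer emptying the buffer
                    // in the meantime; a bare <-out could then block forever.
                    select {
                    case <-out:
                    default:
                    }
                    out <- v // Push the newest value
                }
            }
        }()
        return out
    }

The key insight: the buffered channel is the ring buffer. When it’s full, select falls through to the default case, where we drop the oldest value and push the new one in.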

The trade-off is explicit: you lose old data to keep the system flowing.

Where it’s useful: maintaining recent event history, throttling fast producers, bounded queues where old data is expendable.
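Here is a usage sketch that pushes 1000 values through a small ring buffer. How many values survive depends on scheduling, so I don’t show an exact output; what is guaranteed is that the values arrive in order and the newest one is never dropped. This copy of ringBuffer drains with a nested non-blocking select, and the latest helper is hypothetical.

```go
package main

import "fmt"

// ringBuffer forwards values from in, dropping the oldest buffered value
// whenever the buffer is full.
func ringBuffer(in <-chan int, size int) <-chan int {
	out := make(chan int, size)
	go func() {
		defer close(out)
		for v := range in {
			select {
			case out <- v:
			default:
				// Full: drop the oldest value unless the consumer just took one.
				select {
				case <-out:
				default:
				}
				out <- v
			}
		}
	}()
	return out
}

// latest pushes 0..n-1 through a ring buffer of the given size and returns
// whatever the consumer managed to receive.
func latest(n, size int) []int {
	in := make(chan int)
	go func() {
		defer close(in)
		for i := 0; i < n; i++ {
			in <- i
		}
	}()
	var got []int
	for v := range ringBuffer(in, size) {
		got = append(got, v)
	}
	return got
}

func main() {
	got := latest(1000, 4)
	fmt.Printf("received %d of 1000 values, last = %d\n", len(got), got[len(got)-1])
}
```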

4. Bounded Parallelism

    graph LR
        Work[N Tasks] -->|jobs channel| W1[Worker 1]
        Work -->|jobs channel| W2[Worker 2]
        Work -->|jobs channel| WM[Worker M]
        W1 --> Done[wg.Wait]
        W2 --> Done
        WM --> Done

A clean pattern for “do these N things concurrently, but never more than M at a time”:

    func boundedParallel(works []int, parallelism int, fn func(int)) {
        var wg sync.WaitGroup
        jobs := make(chan int, len(works))

        // Start workers
        wg.Add(parallelism)
        for range parallelism {
            go func() {
                defer wg.Done()
                for job := range jobs {
                    fn(job)
                }
            }()
        }

        // Send work to workers
        for _, work := range works {
            jobs <- work
        }
        close(jobs)

        wg.Wait()
    }

This is essentially a worker pool wrapped in a reusable function. The parallelism parameter makes it easy to tune based on the workload.

Where it’s useful: preventing resource exhaustion, respecting API rate limits, controlling concurrent I/O.
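Since fn returns nothing, one simple way to get results back out is to have each job write into its own slot of a pre-sized slice. A sketch of that, with boundedParallel repeated so the example stands alone; the squares helper is hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// boundedParallel runs fn for every item in works, using at most
// `parallelism` goroutines at a time.
func boundedParallel(works []int, parallelism int, fn func(int)) {
	var wg sync.WaitGroup
	jobs := make(chan int, len(works))

	wg.Add(parallelism)
	for i := 0; i < parallelism; i++ {
		go func() {
			defer wg.Done()
			for job := range jobs {
				fn(job)
			}
		}()
	}
	for _, w := range works {
		jobs <- w
	}
	close(jobs)
	wg.Wait()
}

// squares computes i*i for i in [0, n) with bounded parallelism.
func squares(n, parallelism int) []int {
	indices := make([]int, n)
	for i := range indices {
		indices[i] = i
	}
	results := make([]int, n)
	boundedParallel(indices, parallelism, func(i int) {
		results[i] = i * i // each job writes only its own slot, so no lock is needed
	})
	return results
}

func main() {
	fmt.Println(squares(6, 3)) // prints [0 1 4 9 16 25]
}
```

Reading results after boundedParallel returns is safe because wg.Wait orders every worker’s writes before the return.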

Essential Patterns from the Standard Library

1. Context

The context package is one of those things I didn’t appreciate until I needed it. It provides a standardized way to carry deadlines, cancellation signals, and request-scoped values through a chain of goroutines.

The key insight: contexts form a tree. When you cancel a parent, all children get cancelled too. This is how you do graceful shutdown across dozens of goroutines.

    graph TD
        Root(Root Context)
        Root --> WTC[WithTimeout / WithCancel]
        WTC --> Derived[Derived Context]
        Derived --> G1(Goroutine 1)
        Derived --> G2(Goroutine 2)
        Root --> WV[WithValue]
        WV --> Fn[Function Call]
        Root -.->|cancel| Derived
        Derived -.->|cancel propagates| G1
        Derived -.->|cancel propagates| G2

The main tools:

- WithCancel(): manual cancellation
- WithTimeout() / WithDeadline(): automatic cancellation after a duration or at a fixed point in time
- WithValue(): attach request-scoped data (use sparingly)

    func worker(ctx context.Context, id int) {
        for {
            select {
            case <-ctx.Done():
                fmt.Printf("Worker %d: shutting down\n", id)
                return
            default:
                fmt.Printf("Worker %d: working...\n", id)
                time.Sleep(500 * time.Millisecond)
            }
        }
    }

    func main() {
        ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
        defer cancel()

        for i := range 3 {
            go worker(ctx, i)
        }

        time.Sleep(3 * time.Second)
        fmt.Println("Main: exiting")
    }

I use this heavily in CLI tools: when a user hits Ctrl+C, the signal cancels the root context, which propagates down to every goroutine doing API calls or waiting on I/O. Without it, you’d need to manually track and stop each one.

Where it’s useful: request lifecycle management in servers, passing auth tokens and trace IDs, ensuring cleanup with defer cancel().
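For the Ctrl+C case, the standard library’s signal.NotifyContext does the wiring. Here is a rough sketch of how I would set it up; runWorkers and demo are hypothetical names, and the extra timeout is only there so the demo exits on its own.

```go
package main

import (
	"context"
	"fmt"
	"os"
	"os/signal"
	"sync"
	"time"
)

// runWorkers starts n workers and blocks until all of them have observed
// cancellation. It returns how many workers shut down.
func runWorkers(ctx context.Context, n int) int {
	var wg sync.WaitGroup
	stopped := make(chan int, n)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			<-ctx.Done() // stand-in for work that selects on ctx.Done()
			stopped <- id
		}(i)
	}
	wg.Wait()
	return len(stopped)
}

// demo wires Ctrl+C and a timeout into one context and runs three workers.
func demo(timeout time.Duration) int {
	// Ctrl+C (os.Interrupt) cancels ctx; stop releases the signal handler.
	ctx, stop := signal.NotifyContext(context.Background(), os.Interrupt)
	defer stop()

	// Assumption for the demo: also give up after `timeout` so it always exits.
	ctx, cancel := context.WithTimeout(ctx, timeout)
	defer cancel()

	return runWorkers(ctx, 3)
}

func main() {
	fmt.Println("workers stopped:", demo(2*time.Second)) // prints: workers stopped: 3
}
```

Because the timeout context is derived from the signal context, whichever fires first cancels every worker.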

2. Errgroup

errgroup (from golang.org/x/sync) is what I reach for instead of sync.WaitGroup when goroutines can fail. It waits for all goroutines to finish and returns the first error encountered. If you create it with errgroup.WithContext, it also cancels the context on the first error — so other goroutines can bail out early.

    func main() {
        var g errgroup.Group
        urls := []string{
            "https://google.com",
            "https://tasnimzotder.com",
            "https://invalid-url",
        }

        for _, url := range urls {
            g.Go(func() error {
                return fetchData(url)
            })
        }

        if err := g.Wait(); err != nil {
            fmt.Println("Error fetching URLs:", err)
        } else {
            fmt.Println("Successfully fetched all URLs")
        }
    }

Where it’s useful: concurrent API calls where any failure should abort the rest (with errgroup.WithContext), and parallel data processing where reporting the first error is enough. Note that Wait returns only the first error; it does not aggregate them.
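To show what “cancel the rest on the first error” means mechanically, here is a standard-library-only sketch. To be clear, this is not errgroup’s implementation, just a hand-rolled approximation of the behavior; runAll, failFast, slow, and demo are all made-up names.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"sync"
	"time"
)

// runAll runs every task concurrently. The first task to fail cancels the
// shared context; runAll waits for all tasks and returns that first error.
func runAll(parent context.Context, tasks ...func(context.Context) error) error {
	ctx, cancel := context.WithCancel(parent)
	defer cancel()

	var (
		wg       sync.WaitGroup
		once     sync.Once
		firstErr error
	)
	for _, task := range tasks {
		wg.Add(1)
		go func(t func(context.Context) error) {
			defer wg.Done()
			if err := t(ctx); err != nil {
				once.Do(func() {
					firstErr = err
					cancel() // tell the other tasks to stop
				})
			}
		}(task)
	}
	wg.Wait()
	return firstErr
}

var errBoom = errors.New("boom")

// failFast fails immediately.
func failFast(ctx context.Context) error { return errBoom }

// slow pretends to work for a while but gives up as soon as ctx is cancelled.
func slow(ctx context.Context) error {
	select {
	case <-time.After(5 * time.Second):
		return nil
	case <-ctx.Done():
		return ctx.Err()
	}
}

// demo runs two slow tasks next to one that fails right away.
func demo() error {
	return runAll(context.Background(), slow, failFast, slow)
}

func main() {
	fmt.Println(demo()) // prints: boom
}
```

The slow tasks return context.Canceled once the failure cancels the context, but sync.Once has already recorded the original error, so that is what the caller sees.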

3. Rate Limiting

    graph LR
        Refill[Token Refill<br/>steady rate] --> Bucket[Bucket<br/>capacity = burst]
        Bucket -->|token available| Allow[Request Allowed]
        Bucket -.->|empty| Wait[Request Waits]
        Wait -.-> Bucket

I’ve implemented rate limiting two ways in Go. The simple approach uses time.Tick for a fixed rate:

    func main() {
        rateLimit := time.Second / 10 // 10 calls per second
        requests := make(chan int, 5)
        for i := range 5 {
            requests <- i
        }
        close(requests)

        throttle := time.Tick(rateLimit)
        for req := range requests {
            <-throttle
            fmt.Println("request", req, time.Now())
        }
    }

The more sophisticated approach uses golang.org/x/time/rate, which implements a token bucket with burst support:

    func main() {
        // rate limiter: 5 req per second with burst of 10
        limiter := rate.NewLimiter(5, 10)

        var wg sync.WaitGroup
        for i := range 20 {
            wg.Add(1)
            go func(id int) {
                defer wg.Done()
                if err := limiter.Wait(context.Background()); err != nil {
                    fmt.Printf("Rate limiter error: %v\n", err)
                    return
                }
                fmt.Printf("Request %d processed at %s\n", id, time.Now())
            }(i)
        }
        wg.Wait()
    }

The burst parameter is key: it lets you send a quick batch of requests without waiting, then throttles to the sustained rate.

Where it’s useful: protecting APIs from abuse, respecting third-party rate limits, ensuring fair resource usage in multi-tenant systems.
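If the token bucket idea feels abstract, here is a toy version with the clock passed in explicitly so the behavior is easy to follow. It is a teaching sketch under my own assumptions (the bucket type, newBucket, allow, and simulate are all made up), not how golang.org/x/time/rate is implemented, and it is not safe for concurrent use.

```go
package main

import (
	"fmt"
	"time"
)

// bucket is a minimal token bucket. It is not safe for concurrent use.
type bucket struct {
	rate   float64   // tokens added per second
	burst  float64   // maximum tokens the bucket can hold
	tokens float64   // tokens currently available
	last   time.Time // last time tokens were refilled
}

// newBucket returns a bucket that starts full.
func newBucket(rate float64, burst int, now time.Time) *bucket {
	return &bucket{rate: rate, burst: float64(burst), tokens: float64(burst), last: now}
}

// allow refills the bucket for the time elapsed since the last call and
// reports whether one token could be taken.
func (b *bucket) allow(now time.Time) bool {
	b.tokens += now.Sub(b.last).Seconds() * b.rate
	if b.tokens > b.burst {
		b.tokens = b.burst
	}
	b.last = now
	if b.tokens < 1 {
		return false
	}
	b.tokens--
	return true
}

// simulate replays a fixed schedule against a bucket with rate 1/s and burst 3.
func simulate() []bool {
	start := time.Unix(0, 0)
	later := start.Add(time.Second)
	b := newBucket(1, 3, start)
	return []bool{
		b.allow(start), b.allow(start), b.allow(start), // the burst of 3 passes
		b.allow(start), // bucket is empty
		b.allow(later), // one token has been refilled
		b.allow(later), // empty again
	}
}

func main() {
	fmt.Println(simulate()) // prints [true true true false true false]
}
```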

What I’ve Learned

After working with these patterns for a while, a few lessons stand out:

- Start simple: A goroutine and a channel solve most problems. Don’t reach for worker pools or bounded parallelism until you actually need them.

- Always use context: Wire it through everything. The one time you skip it is the time you’ll get a goroutine leak.

- Channels are for communication, mutexes are for state: I used to force channels into every synchronization problem. Sometimes a sync.Mutex is clearer.

- Profile before optimizing: I’ve spent hours tuning worker pool sizes only to find the bottleneck was a database query, not the concurrency model.

- Test with -race: The Go race detector has caught bugs I never would have found manually. Run go test -race on everything.

These patterns aren’t just academic — they’re tools I reach for daily. The trick is knowing which one fits the problem, and that only comes from using them and occasionally getting it wrong.

References

- Advanced Go Concurrency Patterns. https://go.dev/talks/2013/advconc.slide

- Concurrency in Go. O’Reilly Online Learning. https://www.oreilly.com/library/view/concurrency-in-go/9781491941294/ch04.html

- Go Concurrency Patterns. https://go.dev/talks/2012/concurrency.slide

- Go Wiki: LearnConcurrency - The Go Programming Language. https://go.dev/wiki/LearnConcurrency

- Mastering Go’s Context: From Basics to Best Practices - Claudiu C. Bogdan. https://claudiuconstantinbogdan.me/articles/go-context

- Advanced Concurrency Patterns | Learn Go. Karan Pratap Singh. https://www.karanpratapsingh.com/courses/go/advanced-concurrency-patterns

- Go Concurrency Patterns: Pipeline. Jose Sitanggang. https://www.josestg.com/posts/concurrency-patterns/go-concurrency-patterns-pipeline/